Rate Book Testing

This case study follows a rate book testing product. We wanted to create a product that could provide a transparent, easy-to-run set of tests to verify the content of rate books, and to help the user identify problems.

The user was a pricing actuary or rating agent. These roles involve using modelling software to determine changes in rating and the corresponding test data. The product would allow the user to upload a test case file, set parameters, run the test, and track its progress on a dashboard.

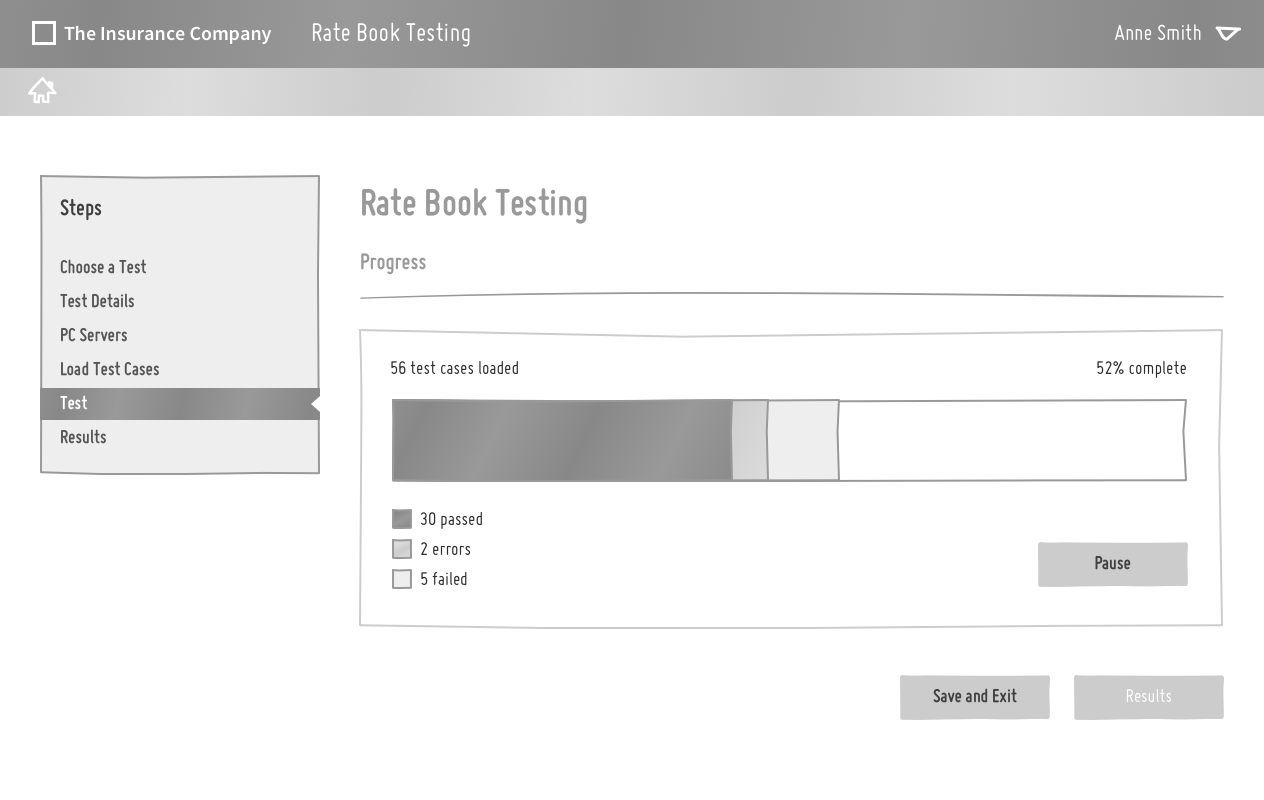

I created a set of wireframes to explore how the flow would work and get an idea of what kind of interface we’d need. This client was still building their UX maturity, and didn’t yet have a system of user testing in place, but we got the wireframes in front of the project manager and a subject matter expert to at least get some perspective on the project.

This feedback led to several improvements, including additional instructions on the parameter setup page and test results presented on one page instead of two.

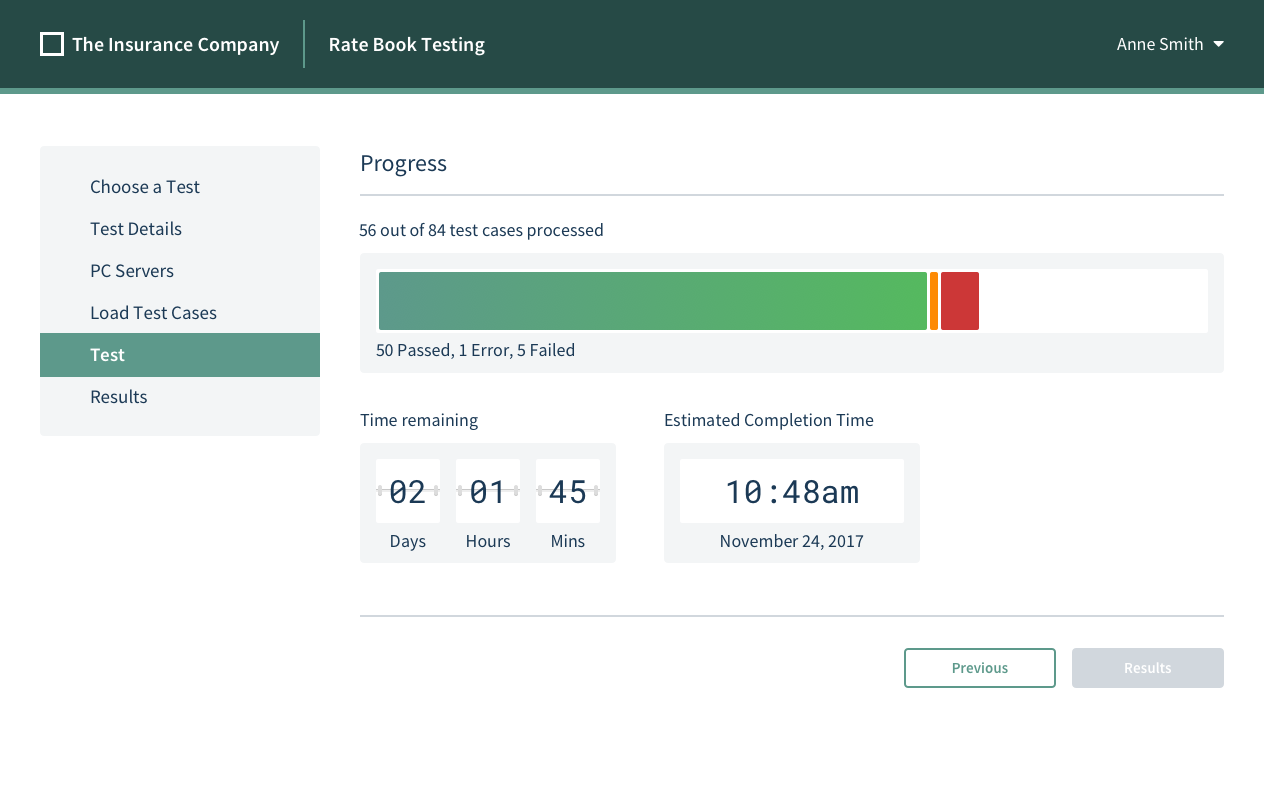

We learned that the tests themselves could take hours or even days to run, so I added extra details to the progress page to mitigate frustration, keep the user informed about the process, and help them plan the rest of their day.

The technical requirements for this project were very well defined, but the user behaviour was not as clear. Ideally, the project would go through a few rounds of user testing in the wild to fill that gap. User interviews would be beneficial, but for a niche product like this, a site visit would probably be necessary to get the full picture. Some follow-up questions:

- How long do files take to upload?

- How long does the actual testing phase last?

- How often are tests abandoned?

- Are there any additional parameters that would be useful to set?

- What is the larger context that the user is operating in?

- What other processes are happening in their day-to-day activities?